Anim Biosci, Volume 36(6); 2023

Abstract

Objective

Iris pattern recognition is well developed and widely practiced in humans. However, little information is available on its application to animals under field conditions, where the major challenge is to capture a high-quality iris image from a constantly moving, non-cooperative animal, even when it is properly restrained. The aim of the study was to validate iris-based biometric identification of Black Bengal goats in order to improve animal management through traceability.

Methods

Forty-nine healthy, disease-free female Black Bengal goats aged 3 months±6 days were randomly selected at the farmer’s field. Images of the left eye of each goat were captured at 3, 6, 9, and 12 months of age using a specialized camera made for human iris scanning. iGoat software was used to match images of the same individual goat at 3, 6, 9, and 12 months of age. The Resnet152V2 deep learning algorithm was then applied to the same image sets to predict matching percentages from the captured eye images alone, without extracting iris features.

Results

The matching threshold computed within and between goats was 55%. The accuracies of template matching of goats at 3, 6, 9, and 12 months of age were 81.63%, 90.24%, 44.44%, and 16.66%, respectively. Because the accuracies at 9 and 12 months of age were low and fell below the minimum threshold matching percentage, iris pattern matching by this process was not acceptable. After training, the validation accuracies of the Resnet152V2 deep learning model were 82.49%, 92.68%, 77.17%, and 87.76% for identification of goats at 3, 6, 9, and 12 months of age, respectively.

Biometric identification has become vital in the digital era. Animal identification is one of the essential components of traceability. It facilitates registration of animals (date of birth, breed information, and production records), recording of authorized animal movements, national herd management, and payment of appropriate grants, subsidies, and insurance claims, and it is a vital tool in resolving animal ownership issues and tracing diseased animals of public and animal health concern [1]. Identification of farm animals is still a challenge at the field level. Traditional methods of animal identification such as ear tagging, branding, tattooing, toe clipping, and ear notching have been widely used but are susceptible to tissue damage, loss, or theft [2,3]. Radio frequency identification (RFID) devices have recently been proposed for traceability purposes [4,5]. However, external RFID devices are susceptible to theft, tampering, and injury, while internal devices are invasive and difficult to maintain. Although DNA-based identification is highly accurate [6], it is time-consuming and costly. Because animal welfare is directly related to productivity, researchers have turned to non-invasive, painless biometric methods for the identification of farm animals. The coat pattern of animals is the most recognizable biometric marker. For example, body stripes identify zebras and tigers, and unique spot patterns identify cheetahs and African penguins. Biometric methods based on the retina [7], muzzle [8], and face [9] have also been tested in animal identification, but their recognition rates are still unsatisfactory.

Iris recognition may be a new biometric technology for both identification and verification of individual livestock animals, since iris patterns vary widely among individuals. Daugman [10] was the pioneer in the field of iris recognition. The iris, as an internal (yet externally visible) organ of the eye, is protected from the environment and remains intact over time. Iris scanning is a rapid method of capturing an image digitally. Musgrave and Cambier [11] obtained a patent on a system and method of animal identification and animal transaction authorisation using iris patterns. Although iris recognition systems are well developed and already in practice in humans [12,13], they have some inherent problems in animal identification. Unlike the human iris, the animal iris differs in configuration and is not circular in shape, so conventional identification techniques cannot be used to segment, normalise, and encode the iris of livestock animals. Capturing a high-quality iris image is one of the major challenges, because the animals are non-cooperative and move constantly under field conditions, even when properly restrained. Iris analysis and recognition have been applied to cow identification [14,15], and iris pattern matching has recently been proposed for recognition of goats [16,17]. However, iris pattern matching still requires validation for identification of individual Black Bengal goats under farmer’s field conditions. Various deep neural networks have been used earlier for individual identification [18], and these networks gave the best results among image-based supervised learning techniques. Supervised learning can be defined as a computational technique in which each input is labelled with its desired output. A deep learning-based supervised model is a powerful computational tool for biometric-based individual recognition systems [19,20].
The advantage of a deep learning model is that it extracts features directly from an image, so there is no need to engineer features separately for each image. A convolutional neural network (CNN) can remove the static feature engineering task and extract important features in iris images that cannot be seen by naked-eye observation [20]. Feature extraction is carried out by a deep neural network through components and parameters such as filter size, activation function, and max pooling layers [21]. Filters extract various features from an image according to the filter size. A max pooling layer retains only the most prominent features of an image. The activation function suppresses unnecessary signals as they pass through the network. The signals are carried by neurons, which are the main drivers in a deep neural network. A deep learning-based supervised model may therefore be useful for biometric-based individual recognition [18]. Resnet152V2 has been suggested to be the best supervised learning model for gait recognition [22] and for emotional differentiation using facial expressions [23]. The present study therefore aimed to validate iris pattern matching using an artificial intelligence-based deep learning approach for recognition and identification of individual Black Bengal goats (Capra hircus) under farmer’s field conditions.

The experimental protocol and animal care were in accordance with the National guidelines for care and use of Agricultural Animals in Agricultural Research and Teaching, as approved (approval number: V/PhD/2016/07) by the Ethical Committee for Animal Experiments of West Bengal University of Animal and Fishery Sciences, Kolkata-700037, West Bengal, India.

The Black Bengal goat was the experimental animal in the present study. The study commenced with initial visits to identify individual female goats and their owners. Forty-nine healthy, disease-free female Black Bengal goats aged 3 months±6 days were randomly selected and identified by a numbered neck tag at Rangabelia under Gosaba Block in the Sunderbans delta, West Bengal, India, located at 22°09′55″N 88°48′28″E with an average elevation of 6 metres above sea level. Data were collected in the form of iris images from the goats at 3 months of age and then at 3-month intervals until they reached 12 months of age. Thus, iris images were collected from each goat at 3, 6, 9, and 12 months of age. Auto-captured iris images of different Black Bengal goats are shown in Figure 1.

Image acquisition, the process of capturing the image, is a very important stage for assuring the clarity of the structure and pattern of the iris. Photographic images of the iris were taken using a specialized iris identification camera, IriShield – USB MK2120U, following the method of Roy et al [17]. The camera had an auto-capture feature and was operated with an Android device; it was connected to a lightweight mobile device by cable. The inclusion criteria required that iris images be captured within a distance of 5 cm from the sensor. The eye-to-camera distance, level of lighting, and amount of reflection were kept uniform to reduce the margin of error. As exclusion criteria, the eyelid and eyelashes were avoided as much as possible so that the whole iris was visible during capture. The inclusion criteria also required auto-capture of a minimum of 30 and a maximum of 50 images from the left eye of each goat. A minimum total of 5880 iris images (30 images×49 goats×4 occasions) was captured from 49 Black Bengal goats at 3, 6, 9, and 12 months of age.

Every captured iris image contained some unnecessary parts such as the eyelid, sclera, and pupil. The size of the iris in the image also varied with camera-to-eye distance, illumination level, and amount of reflection. This error was mitigated by cropping the unneeded parts, and images were resized to 700×650 pixels. Out of the 30 to 50 iris images of an individual goat, the best 10 images with maximum coverage of the iris area were selected for further processing. Thus, a total of 1960 iris images (10 images×49 goats×4 occasions) were finally processed for matching.

Unlike the human iris, the goat iris is roughly rectangular and cannot be fitted to the circular model used for the human iris. Thus, a human iris segmentation algorithm would not work for segmenting the iris in an image of a goat eye. Based on the iris segmentation method of Masek [24], the iGoat software was developed by Roy et al [17]. The demarcating line between the outer iris boundary and the sclera, as well as the boundary line between the inner edge of the iris and the pupil, were traced. This helped to locate the near-rectangular iris area within the eye, as shown in Figure 2. To find the boundaries of the pupil and iris, the grayscale image was first converted into a binary image with a suitable threshold value. The image was stored in matrix form and searched row-wise from the first element of the matrix, and the first non-zero pixel was assigned as the starting pixel of the boundary. The boundary was then traced in a clockwise direction using the Moore neighbourhood approach until the starting pixel was reached again.
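The thresholding and boundary-tracing steps described above can be sketched as follows. This is a minimal illustration and not the iGoat implementation: the function names, the plain list-of-lists image, the threshold convention (values at or above the threshold become 1), and the simple stop-at-start criterion are all assumptions made for the example.

```python
# Clockwise Moore neighbourhood offsets (row, col) on screen coordinates,
# starting from the west neighbour: W, NW, N, NE, E, SE, S, SW.
NEIGHBOURS = [(0, -1), (-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1)]


def binarize(gray, threshold):
    """Convert a grayscale matrix to a 0/1 matrix (assumed convention: >= threshold is 1)."""
    return [[1 if value >= threshold else 0 for value in row] for row in gray]


def find_start(binary):
    """Scan row-wise and return the first non-zero pixel, or None if the image is empty."""
    for r, row in enumerate(binary):
        for c, value in enumerate(row):
            if value:
                return (r, c)
    return None


def trace_boundary(binary):
    """Trace the outer boundary clockwise until the starting pixel is reached again."""
    start = find_start(binary)
    if start is None:
        return []
    rows, cols = len(binary), len(binary[0])

    def filled(r, c):
        return 0 <= r < rows and 0 <= c < cols and binary[r][c] == 1

    boundary = [start]
    current = start
    backtrack = 0  # the row-wise scan arrived from the west, so the west neighbour is empty
    for _ in range(4 * rows * cols):  # safety bound for this sketch
        for step in range(1, 9):
            d = (backtrack + step) % 8
            candidate = (current[0] + NEIGHBOURS[d][0], current[1] + NEIGHBOURS[d][1])
            if filled(*candidate):
                # The neighbour examined just before the hit is background:
                # it becomes the backtrack pixel of the next position.
                prev = NEIGHBOURS[(d - 1) % 8]
                offset = (prev[0] - NEIGHBOURS[d][0], prev[1] - NEIGHBOURS[d][1])
                backtrack = NEIGHBOURS.index(offset)
                current = candidate
                break
        else:
            return boundary  # isolated pixel: no filled neighbour
        if current == start:
            return boundary
        boundary.append(current)
    return boundary
```

For a 2×2 block of bright pixels inside a dark 4×4 image, the trace visits the four block pixels in clockwise order and stops when it returns to the first one.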

The iris region was normalized to remove sources of inconsistency, such as dimensional inconsistencies due to pupil dilation under varying levels of illumination, varying imaging distance, rotation of the camera, head tilt, and rotation of the eye at capture [15]. Normalization yielded iris regions of the same fixed dimensions across multiple images of the same iris taken under different conditions (Figure 2). The centre of the iris region was detected and used as the reference point from which radial vectors passed through the iris region. A total of 240 radial vectors were assigned, and 20 data points were selected along each radial vector. For each data point along each radial line, the Cartesian location was calculated. In the normalized polar representation, intensity values were removed based on the linear interpolation method. To compensate for the effects of image contrast and illumination, histogram equalization was performed.
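The sampling geometry of this normalization step can be sketched as below: 240 radial vectors from the iris centre, 20 data points on each, and a Cartesian location for every point. The sketch rests on several assumptions that the text does not state: the inner and outer iris boundaries are supplied as hypothetical functions of the angle (`inner_radius`, `outer_radius`), the data points are evenly spaced between them, and linear (bilinear) interpolation is used to read the intensity at non-integer pixel locations.

```python
import math


def bilinear(image, x, y):
    """Read the intensity at a non-integer location (x = column, y = row)."""
    rows, cols = len(image), len(image[0])
    x = min(max(x, 0.0), cols - 1.0)  # clamp to the image area
    y = min(max(y, 0.0), rows - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def normalize_iris(image, cx, cy, inner_radius, outer_radius,
                   n_radial=240, n_points=20):
    """Return an n_points x n_radial matrix sampled along radial vectors.

    inner_radius(theta) and outer_radius(theta) are assumed to give the
    distance from the centre (cx, cy) to the pupil-side and sclera-side
    iris boundaries in the direction theta.
    """
    normalized = []
    for j in range(n_points):
        t = j / (n_points - 1)  # 0 at the inner boundary, 1 at the outer
        row = []
        for i in range(n_radial):
            theta = 2.0 * math.pi * i / n_radial
            r_in, r_out = inner_radius(theta), outer_radius(theta)
            r = r_in + t * (r_out - r_in)
            x = cx + r * math.cos(theta)  # Cartesian location of the data point
            y = cy + r * math.sin(theta)
            row.append(bilinear(image, x, y))
        normalized.append(row)
    return normalized
```

Passing the boundaries as functions of the angle, rather than as two fixed radii, is a design choice that lets the same sketch cover a near-rectangular iris, where the distance to the boundary changes with direction.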

Feature encoding was performed by convolving the normalized iris pattern with 1D Log-Gabor wavelets. The 2D normalized pattern was broken up into a number of 1D signals, and these 1D signals were then convolved with the 1D Log-Gabor wavelets. The output of filtering was phase quantized to four levels using the method of Daugman [10], with each filter producing two bits of data for each phasor. Finally, the encoding process produced a bitwise template of size 480×20 pixels containing the encoded bits of information (Figure 2), together with a corresponding noise mask that indicated the corrupt areas within the iris pattern and marked the corresponding bits in the template as corrupt.
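The four-level phase quantization can be illustrated with a short sketch. It assumes that the complex filter responses have already been computed, and that the two bits record the signs of the real and imaginary parts (one bit per part, so one of four quadrants); the function names and the exact bit convention are assumptions for the example. With 240 samples per row and two bits per sample, each row holds 480 bits, which agrees with the 480×20 template size.

```python
def quantize_phase(response):
    """Map one complex filter response to two bits, one per quadrant axis."""
    real_bit = 1 if response.real >= 0 else 0
    imag_bit = 1 if response.imag >= 0 else 0
    return (real_bit, imag_bit)


def encode_row(responses):
    """Encode one row of complex responses as a flat list of bits."""
    bits = []
    for z in responses:
        bits.extend(quantize_phase(z))
    return bits


# One response in each quadrant gives the four possible two-bit codes.
example = encode_row([1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j])
print(example)  # [1, 1, 0, 1, 0, 0, 1, 0]
```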

Hamming distance (HD), as employed by Daugman [10], was used as the metric for iris pattern matching and recognition. When the HD of two templates was calculated, one template was shifted left and right bit-wise, and an HD value was calculated for each successive shift. This corrected for misalignments in the normalized iris pattern caused by rotational differences during imaging. When two bit patterns were completely independent, such as iris templates generated from different irises, the HD between them was expected to be close to 0.5: in independent, random bit patterns each bit has a 50 percent probability of being set to 1 or to 0, so about half of the bits agree and half disagree between the two patterns. When two patterns were derived from the same iris, the HD between them was close to 0, as they were highly correlated. From the calculated HD values, only the lowest was taken, since this corresponded to the best match between the two templates. Iris pattern matching was first performed among the ten iris images of each individual animal at 3, 6, 9, and 12 months of age to authenticate the same animal. A cut-off value of 55% was set as the minimum threshold matching percentage. Thus, a matching percentage of 55% or above signified that the images were from the same Black Bengal goat [17]. Iris pattern matching was then carried out between animals to distinguish different Black Bengal goats at different times. A matching percentage below 55% between two animals indicated that the two Black Bengal goats were different in biometric identity.
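A minimal sketch of the shifted, noise-masked Hamming distance is given below. It treats a template as a single row of bits for brevity, uses circular single-bit shifts, and marks corrupt bits with 1 in the mask; the function names, the `max_shift` parameter, and the mask convention are assumptions for illustration rather than details of the iGoat software.

```python
def hamming_distance(template_a, mask_a, template_b, mask_b):
    """Fraction of disagreeing bits among positions not marked corrupt in either mask."""
    valid = 0
    disagree = 0
    for a, noise_a, b, noise_b in zip(template_a, mask_a, template_b, mask_b):
        if noise_a or noise_b:
            continue  # skip bits flagged as corrupt in either template
        valid += 1
        disagree += a != b
    return disagree / valid if valid else None


def rotate(bits, k):
    """Circularly shift a bit list by k positions (negative k shifts the other way)."""
    k %= len(bits)
    return bits[-k:] + bits[:-k] if k else list(bits)


def best_match(template_a, mask_a, template_b, mask_b, max_shift=8):
    """Return the lowest HD over all shifts, i.e. the best alignment of the two templates."""
    best = None
    for shift in range(-max_shift, max_shift + 1):
        hd = hamming_distance(rotate(template_a, shift), rotate(mask_a, shift),
                              template_b, mask_b)
        if hd is not None and (best is None or hd < best):
            best = hd
    return best
```

Shifting the mask together with its template keeps each noise flag attached to the bit it describes, so a rotated copy of the same template still reaches an HD of 0 at the correct shift.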

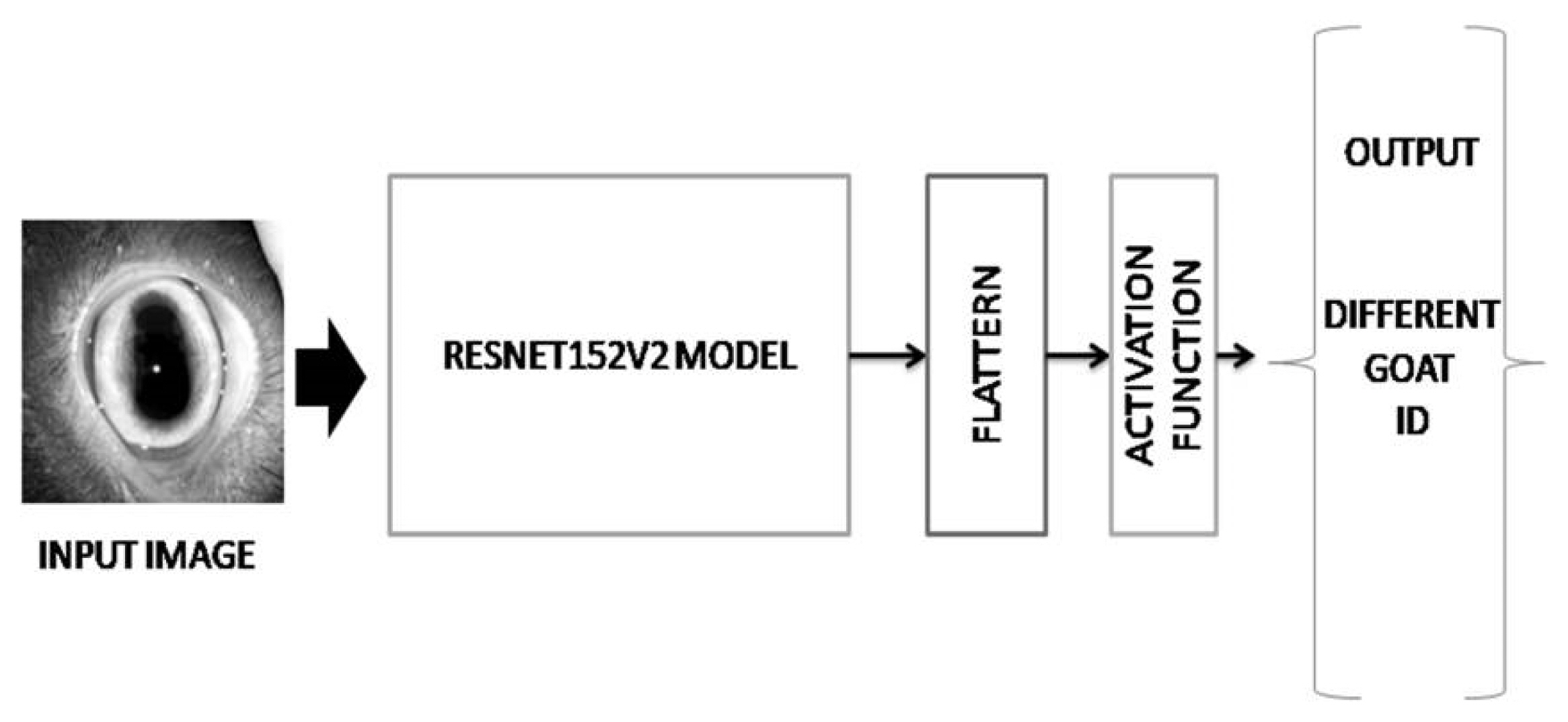

The Resnet152V2 deep learning model was applied to the eye images of goats at different ages for individual identification. Recently, four architectures (VGG16, ResNet152V2, InceptionV3, and DenseNet201) have been used to train models for superpixel classification and segmentation of sheep, using a dataset of 512 images from 32 sheep, with a view to animal tracking and weight prediction [25]. A self-supervised deep learning module has been implemented to accurately recognize Chengdu ma goats [26]. A deep learning-based identification model takes an image as input and processes it through different layers: the convolutional layer, the pooling layer, the ReLU activation layer, and the fully connected layer. The present model was constructed from various convolution layers, max pooling layers, and a combination of skip connections. The convolution layer extracts the features of an input image in matrix form by applying a filter matrix to the image. The output of the convolution layer is described as follows.

If an m×m filter ω is applied to an N×N input, the convolutional layer output will be of size (N−m+1)×(N−m+1). Each output value is obtained by summing the contributions from the previous layer cells, weighted by the filter components.
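A standard way to write this weighted sum is shown below. The notation is introduced here for illustration and is an assumption: $y^{\ell-1}$ denotes the N×N output of the previous layer, $x^{\ell}$ the pre-activation output of the current layer, and indices start at zero.

```latex
x_{ij}^{\ell} \;=\; \sum_{a=0}^{m-1} \sum_{b=0}^{m-1} \omega_{ab}\, y_{(i+a)(j+b)}^{\ell-1},
\qquad 0 \le i,\, j \le N-m
```

Because $i$ and $j$ each take $N-m+1$ values, the output has size (N−m+1)×(N−m+1), in agreement with the statement above.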

Various kinds of filter matrices, such as edge filters and shape filters, are available for feature extraction. Both the filter matrix and the convolution layer depend on the kernel size of the layer, and features are extracted according to that kernel size. The max pooling layer keeps the maximum value within each window of a feature matrix, according to its kernel size, which helps to focus on the most important regions of an image. The activation layer normalizes the information and omits dead information. Finally, the fully connected layers flatten all information into a 1-D vector, which is used to predict the output for the input image. The model has the same number of output terminals as the number of classes to be classified. In this problem, individual goats have to be identified, so each output terminal represents the identification number of an individual goat within a particular age group.
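The three layer operations described above can be sketched in plain Python on a small matrix. This is a toy illustration of the operations only, not the Resnet152V2 architecture; the function names, the square input, the unit stride for convolution, and the non-overlapping pooling windows are assumptions for the example.

```python
def conv2d_valid(image, kernel):
    """Slide an m x m kernel over an N x N image; output is (N-m+1) x (N-m+1)."""
    n, m = len(image), len(kernel)
    size = n - m + 1
    return [
        [
            sum(kernel[a][b] * image[i + a][j + b]
                for a in range(m) for b in range(m))
            for j in range(size)
        ]
        for i in range(size)
    ]


def relu(feature_map):
    """ReLU activation: negative values are set to zero."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


# A 5 x 5 image and a 2 x 2 kernel give a 4 x 4 feature map, pooled to 2 x 2.
image = [[5 * i + j for j in range(5)] for i in range(5)]
features = relu(conv2d_valid(image, [[1, 0], [0, 1]]))
pooled = max_pool(features, size=2)
print(len(features), pooled)  # 4 [[18, 22], [38, 42]]
```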

The dataset for training the model was organized according to the age of the individual goats. Figure 1 illustrates how the dataset was created for training the model, and Figure 3 shows how the proposed model worked using the Resnet152V2 deep learning model. In Figure 4, the model takes the eye image of a goat from the training set and processes it to produce the predicted output. An image containing the iris pattern was fed into the deep learning model. The model extracted features from the input image and analysed them to find the best-matching result among the training samples. The goat with the highest confidence value was selected as the output of the model. The output terminals produced values from 0 to 1. During training, the value of the output terminal corresponding to the particular goat was monitored, and the model was iterated until that terminal rose above the threshold value. The model extracted all features from an image and flattened the information into a 1-D vector, which was passed to a softmax activation function in the last layer to identify the individual goat ID. The captured eye images were divided in a ratio of 85:15 into a training image set and a validation image set, respectively. The network was trained with all images from the training set, and the trained network was tested on the test image sets. In testing, an unknown eye image was given to the trained model, and the terminal with the maximum output value was selected as the predicted output. The predicted goat ID was obtained from the selected terminal.
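The final prediction step, softmax over the output terminals followed by selection of the terminal with the maximum value, can be sketched as follows. The raw terminal values and the goat ID labels in the example are hypothetical, as are the function names.

```python
import math


def softmax(logits):
    """Convert raw terminal values to values between 0 and 1 that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_goat_id(logits, goat_ids):
    """Return the ID of the terminal with the maximum output and its confidence."""
    probabilities = softmax(logits)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return goat_ids[best], probabilities[best]


# Hypothetical raw outputs for three goats; the second terminal is the largest.
goat_id, confidence = predict_goat_id([0.1, 2.0, -1.0], ["G01", "G02", "G03"])
print(goat_id)  # G02
```

A real model for this task would have one terminal per goat in the age group, for example 49 terminals when all goats are present.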

Accuracy is one metric for evaluating classification models. Training accuracy (Train acc) measures how well the model has learnt from the training dataset. Because the output for every training input is known to the model, the weights are adjusted until the error tolerance or the maximum number of epochs is reached. Validation accuracy (Val acc) is the prediction accuracy of the trained model on inputs whose outputs are not known to the model. Each prediction is cross-checked against the known result, and the percentage of correct predictions is returned. The model performs well if the validation accuracy is almost the same as the training accuracy, i.e., the model generalizes to unknown inputs. The following formula was applied to calculate accuracy.
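Classification accuracy is conventionally computed as the number of correct predictions divided by the total number of predictions, expressed as a percentage. The sketch below assumes that conventional definition; the function name and the example labels are hypothetical and are not taken from the study.

```python
def accuracy_percent(predicted_ids, actual_ids):
    """Percentage of predictions that match the known identities."""
    if not actual_ids or len(predicted_ids) != len(actual_ids):
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(p == a for p, a in zip(predicted_ids, actual_ids))
    return 100.0 * correct / len(actual_ids)


# Hypothetical example: three of four predictions are correct.
print(accuracy_percent(["G01", "G02", "G03", "G04"],
                       ["G01", "G02", "G07", "G04"]))  # 75.0
```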

Mean iris pattern matching of individual Black Bengal goats (n = 49) at 3, 6, 9, and 12 months of age is presented in Table 1 (see also Supplementary Table S1). The mean iris pattern matching of the 49 individual goats was ≥55% at all ages, indicating that the iris templates were generated from the same iris of a particular goat.

Mean (±standard error) iris pattern matching percentages of the best images (covering the maximum iris area without glare) from ten representative goats at 3, 6, 9, and 12 months of age are shown in Table 2 (see also Supplementary Tables S1 to S5). When iris patterns were compared between two goats over time, the matching percentage was always below 55%, indicating that the iris templates were generated from different irises of two goats.

The overall identification accuracies of iris pattern matching and the losses for each age group are presented in Table 3. The accuracies of template matching of goats at 3, 6, 9, and 12 months of age were 81.63%, 90.24%, 44.44%, and 16.66%, respectively. The accuracies of iris pattern matching at 9 and 12 months of age were low and below the minimum threshold matching percentage.

The accuracies of eye image matching of goats at various ages for individual recognition are shown in Table 4. The maximum accuracies were 95.73% (training) and 92.68% (validation). The best accuracy of eye image matching for individual recognition was recorded at 6 months of age. The lowest accuracy of eye image matching for individual recognition was registered at 9 months of age.

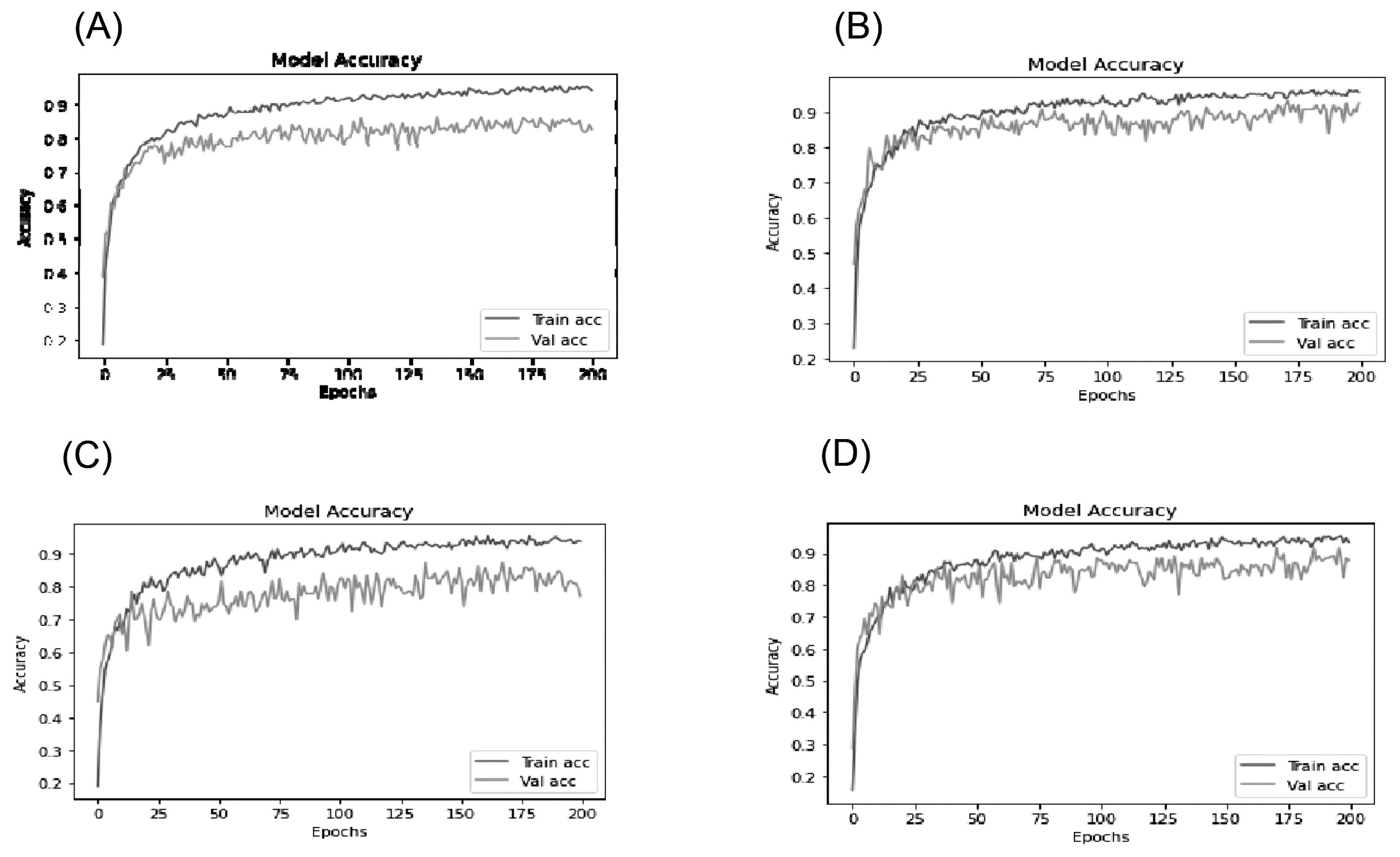

The performance of the Resnet152V2 deep learning model at different ages is shown in Figure 5 (A–D). The figures show how the accuracy of the model changes during each epoch. The learning curve for 6 months of age was the best compared with those for the other ages. The X-axis represents the epochs and the Y-axis the accuracy of the model. The number of epochs is the number of times the learning algorithm works through the whole training dataset. In every epoch, the model adjusts its weight values to fit the training dataset, so the accuracy of the model changes accordingly.

The iris is a thin, roughly rectangular structure that lies between the cornea and the lens of the goat’s eye. Formation of the unique patterns of the iris is random and not related to any genetic factors [27]. As an internal organ of the eye (though externally visible), the iris is well protected from the environment and remains stable over time; the iris pattern thus remains almost the same from birth to death unless the iris is injured. Iris architecture is not only complex but also unique to an individual, and the iris pattern of each eye is considered a unique biometric feature [28]. Because the two eyes of an individual contain completely independent iris patterns, only the iris pattern of the left eye of each goat was investigated in the present study. Iris pattern matching and recognition have been reported in goats at a particular age [16,17]. The minimum pattern matching among iris templates of the same goat was reported as 59% [17]. In the present study, the mean iris pattern matching of 49 Black Bengal goats at 3, 6, 9, and 12 months of age ranged from 61.45% to 63.71% (Table 1), slightly higher than the previously reported iris pattern matching percentage (59%) in Black Bengal goats [17]. The present study showed that the iris pattern of Black Bengal goats remained the same over time, from 3 months (kid stage) to 12 months of age (mature stage).

A classification model for identifying different Indian goat breeds has been reported by Mandal et al [29]. In earlier studies, iris pattern matching for recognition was proposed on the basis of a small number of goats (≤5) maintained at an organized farm and, consequently, a limited iris image database [16,17]. In the present study, iris pattern matching was performed using a database of 1960 iris image templates to validate the earlier claim in a larger number of goats and to determine whether this biometric identification technique could be useful under farmer’s field conditions. The maximum pattern matching among iris templates of different goats was reported as 54% [17]. The present results were comparable, and a matching percentage below 55% between two goats over time suggested that they were different in biometric identity (Table 2).

The accuracies of template matching of goats at 3, 6, 9, and 12 months of age were 81.63%, 90.24%, 44.44%, and 16.66%, respectively (Table 3). Iris images were captured with an auto-capture human iris scanner from goats under uncontrolled conditions at the farmer’s field, and the resulting eye movement of individual goats might be the reason for the lower accuracies of iris pattern matching at 9 and 12 months of age. The gap between matching and mismatching percentages was narrow because image acquisition was the main challenge in animals owing to their uncontrolled behaviour, and proper focusing of the image was not possible. The gap could be widened if the goats were restrained and the iris images were captured in a controlled environment. In the present study, goat iris images were taken using the IriShield™ – USB MK2120U, which is made for capturing human iris images, since no iris scanner or camera specific to goat iris images was available.

In the present study, the Resnet152V2 deep learning model was used to develop an individual goat identification model based on eye images. Across the different ages, the training accuracies of eye image matching ranged from 93.38% to 95.73% and the validation accuracies from 77.17% to 92.68% (Table 4). The results showed that deep learning techniques were able to recognize individual goats from eye images. After training, the image set of any goat could be identified easily using this deep learning model. Iris texture pattern is determined during fetal development of the eye, does not change with age, and is thus considered a unique biometric feature [30]. The best accuracy (both training and validation) of eye image matching for individual recognition was recorded at 6 months of age. Overfitting, arising from the smaller dataset available at 9 months of age, might be the cause of the lowest validation accuracy at that age. Deep learning-based eye image matching was more accurate than the iris pattern matching system. Iris pattern recognition has some advantages for exploring more accurate and effective iris feature extraction algorithms under various conditions [31,32]. It was also noted that the iris images of some goats could not satisfy the threshold value in template matching. The deep learning approach, however, did not rely on a threshold value for selection. Deep learning methods, especially CNN-based methods, have achieved substantial success in iris recognition [33,34] and have outperformed the classic iris matching method [35].

The present study suggests that the deep learning-based approach may provide the best accuracies for the biometric identification of Black Bengal goats. It is a non-invasive biometric technique for identifying a goat from automatically captured iris images. The deep learning technique can be implemented as an automated system for individual identification, and it is a more cost-effective and time-effective process than other processes.

Notes

ACKNOWLEDGMENTS

The authors would like to express their gratitude to Dr. A. Bandopadhyay, Senior Consultant, ITRA, Ag&Food, Government of India, for conceiving the concept of the research work. The authors are thankful to Dr. Manoranjan Roy, Principal Investigator of the All India Coordinated Research Project on Goat Improvement and Assistant Professor, Animal Genetics and Breeding, West Bengal University of Animal and Fishery Sciences, Kolkata-700037, West Bengal, India, for extending the necessary support to collect the data at Rangabelia, Gosaba Block, Sunderbans delta, West Bengal, India. The help and cooperation extended by Mr. Kaushik Mukherjee, Mr. Sanket Dan, Mr. Kunal Roy, Mr. Subhranil Mustafi, Mr. Subhojit Roy, and Mr. Pritam Ghosh, Department of Information Technology, Kalyani Government Engineering College, Kalyani, Nadia-741235, West Bengal, India, are duly acknowledged.

SUPPLEMENTARY MATERIAL

Supplementary file is available from: https://doi.org/10.5713/ab.22.0157

Supplementary Table S1.

Iris pattern matching percentage of Black Bengal goats at 3, 6, 9, 12 months of age

ab-22-0157-Supplementary-Table-1.pdf

Supplementary Table S2.

Iris pattern matching percentages of best images from ten goats at 3 months of age

ab-22-0157-Supplementary-Table-2.pdf

Supplementary Table S3.

Iris pattern matching percentages of best images from ten goats at 6 months of age

ab-22-0157-Supplementary-Table-3.pdf

Supplementary Table S4.

Iris pattern matching percentages of best images from ten goats at 9 months of age

ab-22-0157-Supplementary-Table-4.pdf

Supplementary Table S5.

Iris pattern matching percentages of best images from ten goats at 12 months of age

ab-22-0157-Supplementary-Table-5.pdf

Figure 1

Auto-captured iris images of different Black Bengal goats, taken with a specialized iris identification camera (IriShield – USB MK2120U) connected by cable to a lightweight mobile device, within a distance of 5 cm from the sensor. The eyelid and eyelashes were avoided as much as possible so that the whole iris was visible during capture.

Figure 2

Image capturing, image pre-processing, iris localization and segmentation to locate the near rectangular iris area, iris normalization to remove inconsistency sources and finally generation of iris biometric template of size 480×20 pixels containing some number of bits of information.

Figure 3

The architecture of the system using the Resnet152V2 model. The convolution layer extracts the features of an input image in matrix form by applying a filter matrix. Various kinds of filter matrices, such as edge filters and shape filters, are available for feature extraction. The filter matrix and convolution layer depend on the kernel size of a layer, so the kernel size determines the features extracted from an image. The max pooling layer keeps the maximum value within each window of a feature matrix according to its kernel size, which helps to focus on the most important regions of an image. The activation layer normalizes the information and omits dead information. Finally, the fully connected layer flattens all information into a 1-D vector, which is used to predict the output for the input image.

Figure 4

The deep learning-based identification model, showing the verification and identification workflows. An input eye image of a goat from the training set is fed into the proposed deep learning model, which processes it and identifies goats with different ID numbers as predicted outputs.

Figure 5

Accuracy of the Resnet152V2 deep learning model at 3 months (A), 6 months (B), 9 months (C), and 12 months (D) of age, showing how the accuracy of the model changes during each epoch. In every epoch, the model adjusts its weight values to fit the training dataset, so the accuracy of the model changes accordingly.

Table 1

Iris pattern matching percentage of Black Bengal goats at 3, 6, 9, 12 months of age (n = 49)

| Item | 3 months | 6 months | 9 months | 12 months |
|---|---|---|---|---|
| Mean | 63.71 | 61.45 | 62.62 | 62.49 |
| SD | 6.87 | 4.87 | 6.52 | 5.81 |
| SE | 0.98 | 0.70 | 0.93 | 0.83 |

Values are iris pattern matching (%). SD, standard deviation; SE, standard error.

Table 2

Mean (±standard error) of iris pattern matching percentages of best images from ten goats over 3, 6, 9, 12 months of age

REFERENCES

1. Bowling MB, Pendell DL, Morris DL, et al. Identification and traceability of cattle in selected countries outside of North America. Prof Anim Sci 2008; 24:287–94.

2. Edwards DS, Johnston AM, Pfeiffer DU. A comparison of commonly used ear tags on the ear damage of sheep. Anim Welf 2001; 10:141–51. https://doi.org/10.1017/S0962728600023812

3. Gosalvez LF, Santamarina C, Averos X, Hernandez-Jover M, Caja G, Babot D. Traceability of extensively produced Iberian pigs using visual and electronic identification devices from farm to slaughter. J Anim Sci 2007; 85:2746–52. https://doi.org/10.2527/jas.2007-0173

4. Regattieri A, Gamber M, Manzini R. Traceability of food products: general framework and experimental evidence. J Food Eng 2007; 81:347–56. https://doi.org/10.1016/j.jfoodeng.2006.10.032

5. Sahin E, Dallery Y, Gershwin S. Performance evaluation of a traceability system. IEEE T Syst Man Cy B 2002; 3:210–8.

6. Loftus R. Traceability of biotech-derived animals: application of DNA technology. Rev Sci Tech Off Int Epiz 2005; 24:231–42. https://doi.org/10.20506/rst.24.1.1563

7. Allen A, Golden B, Taylor M, Patterson D, Henriksen D, Skuce R. Evaluation of retinal imaging technology for the biometric identification of bovine animals in Northern Ireland. Livest Sci 2008; 116:42–52. https://doi.org/10.1016/j.livsci.2007.08.018

8. Barry B, Gonzales-Barron UA, McDonnell K, Butler F, Ward S. Using muzzle pattern recognition as a biometric approach for cattle identification. Trans ASABE 2007; 50:1073–80. https://doi.org/10.13031/2013.23121

9. Corkery GP, Gonzales-Barron UA, Butler F, McDonnell K, Ward S. A preliminary investigation on face recognition as a biometric identifier of sheep. Trans ASABE 2007; 50:313–20. https://doi.org/10.13031/2013.22395

10. Daugman J. How iris recognition works. In: Proceedings of International Conference on Image Processing 1; 2002 Sept 22–5; Rochester, NY, USA.

11. Musgrave C, Cambier JL. System and method of animal identification and animal transaction authorization using iris pattern. Moorestown, NJ, USA: Iridian Technologies, Inc; 2002. US Patent 6424727.

12. Daugman J. New methods in iris recognition. IEEE T Syst Man Cy B 2007; 37:1167–75. https://doi.org/10.1109/TSMCB.2007.903540

13. Feng X, Ding X, Wu Y, Wang PSP. Classifier combination and its application in iris recognition. Int J Pattern Recognit Artif Intell 2008; 22:617–38. https://doi.org/10.1142/S0218001408006314

14. Zhang M, Zhao L. An iris localization algorithm based on geometrical features of cow eyes. In: Proc. SPIE 7495, MIPPR 2009: Automatic Target Recognition and Image Analysis, 749517; 30 October 2009. https://doi.org/10.1117/12.832494

15. Lu Y, He X, Wen Y, Wang PSP. A new cow identification system based on iris analysis and recognition. Int J Biom 2014; 6:18–32. https://doi.org/10.1504/IJBM.2014.059639

16. De P, Ghoshal D. Recognition of non circular iris pattern of the goat by structural, statistical and fourier descriptors. Procedia Comput Sci 2016; 89:845–9. https://doi.org/10.1016/j.procs.2016.06.070

17. Roy S, Dan S, Mukherjee K, et al. Black Bengal goat identification using iris images. In: Bhattacharjee D, Kole DK, Dey N, Basu S, Plewczynski D, editors. Proceedings of International Conference on Frontiers in Computing and Systems; 2020. Advances in Intelligent Systems and Computing, vol 1255. Singapore: Springer; 2022. p. 213–24. https://doi.org/10.1007/978-981-15-7834-2_20

18. Sundararajan K, Woodard DL. Deep learning for biometrics: a survey. ACM Comput Surv (CSUR) 2019; 51:1–34. https://doi.org/10.1145/3190618

19. Minaee S, Abdolrashidi A. Deepiris: Iris recognition using a deep learning approach. arXiv:1907.09380; 2019. https://doi.org/10.48550/arXiv.1907.09380

20. Nguyen K, Fookes C, Ross A, Sridharan S. Iris recognition with off-the-shelf CNN features: A deep learning perspective. IEEE Access 2017; 6:18848–55. https://doi.org/10.1109/ACCESS.2017.2784352

21. Nielsen MA. Neural networks and deep learning. San Francisco, CA, USA: Determination Press; 2015.

22. Apostolidis K, Amanatidis P, Papakostas G. Performance evaluation of convolutional neural networks for gait recognition. In: 24th Pan-Hellenic Conference on Informatics; 2020; p. 61–3.

23. Hwooi SKW, Loo CK, Sabri AQM. Emotion differentiation based on arousal intensity estimation from facial expressions. Information science and applications. Singapore: Springer; 2020. p. 249–57.

24. Masek L. Recognition of human iris patterns for biometric identification [master’s thesis]. Crawley, WA, Australia: School of Computer Science and Software Engineering, the University of Western Australia; 2003.

25. Sant'Ana DA, Pache MCB, Martins J, et al. Computer vision system for superpixel classification and segmentation of sheep. Ecol Inform 2022; 68:101551. https://doi.org/10.1016/j.ecoinf.2021.101551

26. Pu J, Yu C, Chen X, Zhang Y, Yang X, Li J. Research on Chengdu Ma goat recognition based on computer vison. Animals 2022; 12:1746. https://doi.org/10.3390/ani12141746

27. Wildes RP. Iris recognition: an emerging biometric technology. Proc IEEE 1997; 85:1348–63. https://doi.org/10.1109/5.628669

28. Prajwala NB, Pushpa NB. Matching of iris pattern using image processing. Int J Recent Technol Eng 2019; 8:2S1121–3. https://doi.org/10.35940/ijrte.B1004.0982S1119

29. Mandal SN, Ghosh P, Mukherjee K, et al. InceptGI: a convnet-based classification model for identifying goat breeds in India. J Inst Eng India Ser B 2020; 101:573–84. https://doi.org/10.1007/s40031-020-00471-8

30. Bowyer KW, Hollingsworth K, Flynn PJ. Image understanding for iris biometrics: a survey. Comput Vis Image Underst 2008; 110:281–307. https://doi.org/10.1016/j.cviu.2007.08.005

31. Benalcazar DP, Zambrano JE, Bastias D, Perez CA, Bowyer KW. A 3D iris scanner from a single image using convolutional neural networks. IEEE Access 2020; 8:98584–99. https://doi.org/10.1109/ACCESS.2020.2996563

32. Vyas R, Kanumuri T, Sheoran G, Dubey P. Smartphone based iris recognition through optimized textural representation. Multimed Tools Appl 2020; 79:14127–46. https://doi.org/10.1007/s11042-019-08598-7

33. Hamd MH, Ahmed SK. Biometric system design for iris recognition using intelligent algorithms. Inter J Educ Mod Comp Sci 2018; 10:9–16. https://doi.org/10.5815/ijmecs.2018.03.02

34. Jayanthi J, Lydia EL, Krishnaraj N, Jayasankar T, Babu RL, Suji RA. An effective deep learning features based integrated framework for iris detection and recognition. J Ambient Intell Humaniz Comput 2020; 12:3271–81. https://doi.org/10.1007/s12652-020-02172-y

35. Daugman JG. High confidence visual recognition of persons by a test of statistical independence. IEEE Trans Pattern Anal Mach Intell 1993; 15:1148–61. https://doi.org/10.1109/34.244676
